Meta's new AI deepfake strategy: Increased labeling, reduced content removal

Meta has updated its rules on AI-generated content and manipulated media in response to feedback from its Oversight Board. Starting next month, Meta plans to attach a "Made with AI" label to a broader range of content, including deepfakes, to signal to users that AI was involved. It will also add extra context to content that has been altered in ways that could significantly mislead the public on important issues.

This change may lead to more content being labeled, particularly in a year packed with elections around the world. However, Meta will label deepfakes only when they carry "industry standard AI image indicators" or when the uploader discloses that the content is AI-generated. AI-generated content that does not meet these criteria may go unlabeled.

Meta is favoring transparency and added context over removal for AI-generated and manipulated media. In practice, this likely means more such content will stay up on platforms like Facebook and Instagram, carrying labels rather than being taken down.

Another significant shift: starting in July, Meta will stop removing content solely on the basis of its manipulated video policy. The change comes as Meta navigates the complex terrain of content moderation amid legal challenges and regulatory demands, such as the European Union's Digital Services Act.

These policy updates were prompted by feedback from Meta's Oversight Board. The Board had called on Meta to reevaluate its approach to AI-generated content, arguing for a broader policy that reflects how AI-generated media has evolved beyond video alone. In response, Meta is refining its labeling process, applying the "Made with AI" label not only to videos but also to audio and images that are AI-generated or significantly altered. This more comprehensive labeling strategy aims to inform the public and provide context without removing manipulated content that does not violate other community standards.

Why Meta is looking to the fediverse as the future for social media

Why Meta is looking to the fediverse as the future for social media Microsoft’s Surface and Xbox hardware revenues take a big hit in Q3

Microsoft’s Surface and Xbox hardware revenues take a big hit in Q3 Augment, a competitor of GitHub Copilot and backed by Eric Schmidt, emerges from stealth mode with a launch of $252 million

Augment, a competitor of GitHub Copilot and backed by Eric Schmidt, emerges from stealth mode with a launch of $252 million IBM advances further into hybrid cloud management with its $6.4 billion acquisition of HashiCorp

IBM advances further into hybrid cloud management with its $6.4 billion acquisition of HashiCorp Perplexity is raising over $250 million at a valuation of between $2.5 billion and $3 billion for its AI search platform, according to sources.

Perplexity is raising over $250 million at a valuation of between $2.5 billion and $3 billion for its AI search platform, according to sources. Apple announces May 7 event for new iPads

Apple announces May 7 event for new iPads Gurman: iOS 18 AI features to be powered by entirely On-Device LLM, offering privacy and speed benefits

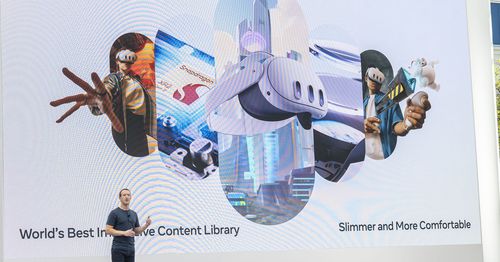

Gurman: iOS 18 AI features to be powered by entirely On-Device LLM, offering privacy and speed benefits Meta aims to become the Microsoft of headsets

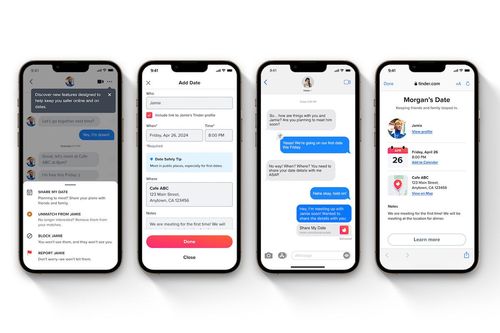

Meta aims to become the Microsoft of headsets Tinder introduces a 'Share My Date' feature allowing users to share their date plans with interested friends

Tinder introduces a 'Share My Date' feature allowing users to share their date plans with interested friends This is Tesla's effective solution for the recalled Cybertruck accelerator pedals

This is Tesla's effective solution for the recalled Cybertruck accelerator pedals