Too many AI models?

Are there too many AI models being created? It feels like it, especially when roughly 10 new ones arrive every single week. This pace makes it hard to compare models with one another, a task that wasn't easy to begin with. Why is this happening, though? We're in a unique phase of AI development in which models large and small are being built by a wide range of creators, from individual developers to large, well-funded teams.

This week alone has seen a fascinating variety of models. For instance, Meta has launched LLaMa-3, touted as an "open" large language model, while the French company Mistral has introduced Mistral 8×22 even as it pulls back from its earlier open-source promises. Then there's Stable Diffusion 3 Turbo, Adobe's AI Assistant for Acrobat, and several more, each bringing something unique to the table. The sheer number of releases, including tools for AI development like torchtune and Glaze 2.0, makes it clear this isn't slowing down anytime soon.

- LLaMa-3: Meta's newest large language model, labeled "open," though its openness is currently debated. It is popular among users.

- Mistral 8×22: A large model known as a "mixture of experts," produced by a French company that has recently become less open.

- Stable Diffusion 3 Turbo: An enhancement of SD3, from Stability, introducing a new API. The use of "turbo" mirrors naming from OpenAI.

- Adobe Acrobat AI Assistant: Adobe introduces a feature to interact with documents, likely a ChatGPT-based service.

- Reka Core: A new multimodal model by a team previously part of a major AI company, aiming to compete with larger models.

- Idefics2: A more open multimodal model, incorporating recent smaller models from Mistral and Google.

- OLMo-1.7-7B: A larger version of AI2's LLM, among the most open models available, preparing for future large-scale models.

- Pile-T5: An improved version of the T5 model, fine-tuned on the code database Pile.

- Cohere Compass: An embedding model that handles multiple data types, designed for broader use cases.

- Imagine Flash: Meta's latest image generation model, using a new method to speed up diffusion while maintaining quality.

- Limitless: A personalized AI across web, macOS, Windows, and wearable platforms, powered by your interactions.

But what does this mean for the future of AI? While it's hard to track every new model, their gradual improvements are what fuel progress in this field. Just as the automotive industry has evolved through countless models, the AI sector is diversifying. Each new model might not be a revolutionary step forward on its own, but together, these models are vital for the advancement of AI technology. We're committed to highlighting the most significant models, especially for those keen on machine learning advancements. When a truly groundbreaking model emerges, it's bound to stand out.


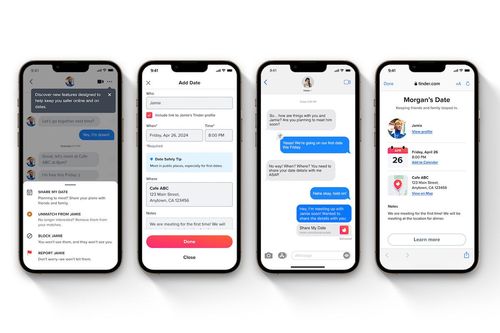
